Outline

- Asymptotically faster sorting.

- A fast O(n2) sort.

- A worst-case O(n log n) sort.

- A worst-case O(n) sort.

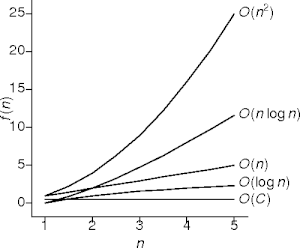

Faster Sorting

|

|

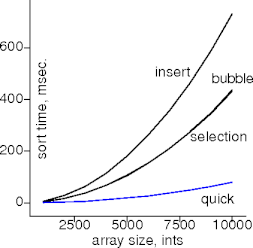

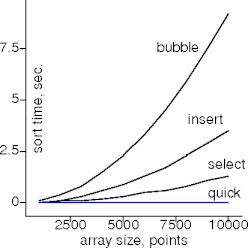

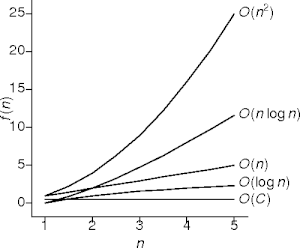

- The previous sorts were all O(n2).

- Can we do better?

- How much better can we do?

|

- Remember:

It’s faster only when it’s asymptotically faster.

A Question Reasked

- The question can’t be answered until the array’s completely sorted.

- Not quite:

- This answer doesn’t require sorting.

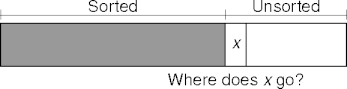

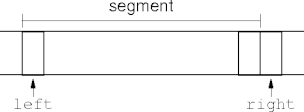

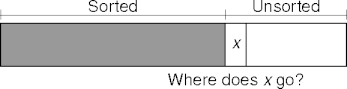

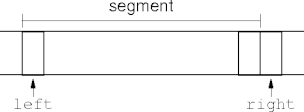

Partitioning

partition(T a[], int left, int right)

T pivot = a[left]

int l = left + 1

while l < right

if (a[l] ≤ pivot) l++

else if (pivot < a[right - 1]) right--

else swap(a[l++], a[--right])

swap(a[left], a[--l])

return l

So What?

- What good is partitioning?

- Repeatedly partitioning the array segments eventually sorts the array.

Quicksort

- And thus, Quicksort:

quicksort(T a[], int left, int right)

if right > 1 + left

int mid = partition(a, left, right)

quicksort(a, left, mid)

quicksort(a, mid + 1, right)

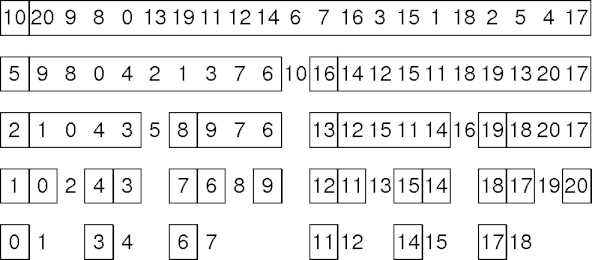

Quicksort Analysis.

- How much work does quicksort do?

Quicksort Analysis..

- The level i

partition()s do a total of O(n) work.

- N elements divide in “half” O(log n) times.

- Each half gets passed to

quicksort(), and so to partition().

- Remember, constants (2 here) don’t matter.

- O(log n) iterations, O(n) work per iteration.

- Quicksort sorts with O(n log n) work.

Oh Really?

No, Not Really

-

partition() may not divide into halves.

- The pivot may be an extreme value.

- This has two consequences:

-

partition() does little useful work.

- There are O(n) instead of O(log n) iterations.

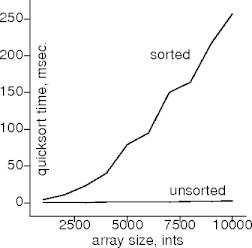

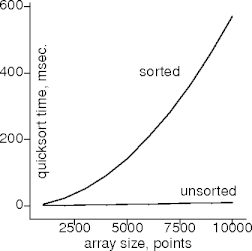

Worst-Case Quicksort

- Quicksort is now O(n2).

Is It?

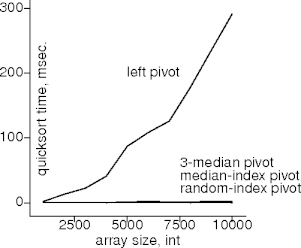

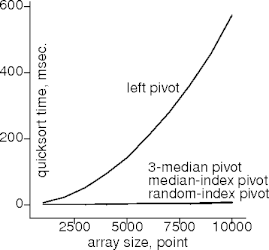

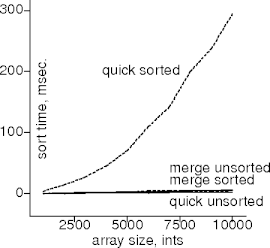

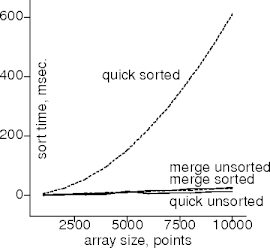

- Worst-case behavior occurs on mostly sorted data.

Avoiding Worst-Case Behavior

- Avoid worst-case quicksort behavior by

- Not using quicksort on sorted data.

- Randomly permuting the data.

- Making a more artful pivot-value choice.

Artful Pivot Choices

Do They Work?

- Worst-case behavior is still O(n2).

- But it has a low probability of occurrence.

Rigorous Halving

- Quicksort slows down when it can’t divide the data evenly.

- Consistent, even halving results in O(n log n) behavior.

- Is there a sort organized around consistent, even halving?

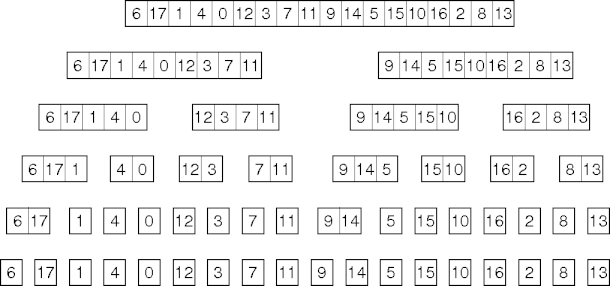

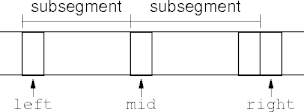

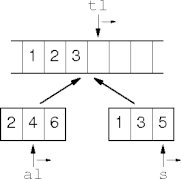

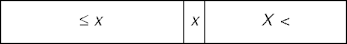

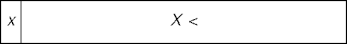

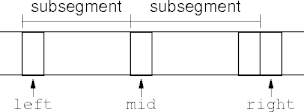

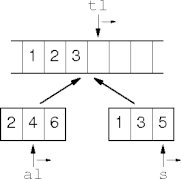

Divide to Conquer

- Now recurse on the two halves.

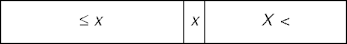

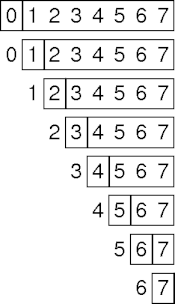

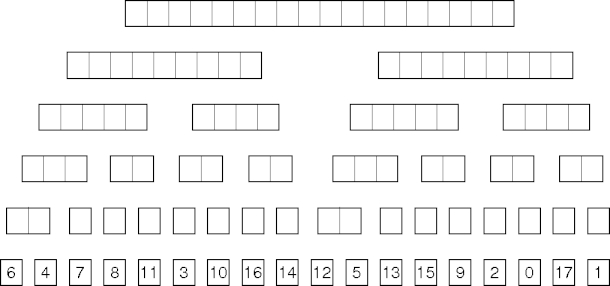

Mergesort Divison Example

- n = 18, levels = ⌈log 18⌉ = 5.

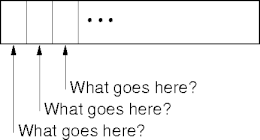

Repeated Halving

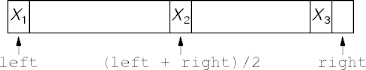

divide(T a[], int left, int right)

if right - left > 1

mid = (right + left)/2

divide(a, left, mid)

divide(a, mid, right)

Now What?

- Two objectives:

- Put everything back in the original array.

- Make sure it’s sorted in the original array.

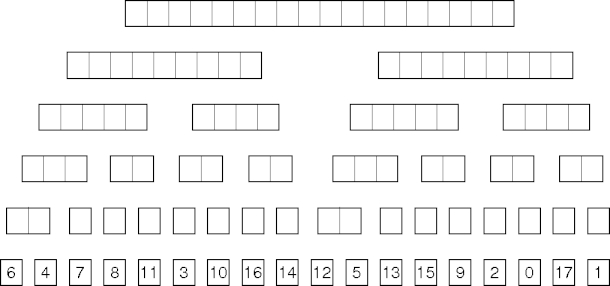

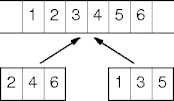

Reuniting Divisions

- A segment of size less than two is sorted.

- Two sorted subsegments can be merged back into the original

segment.

- And the original segment will be sorted too.

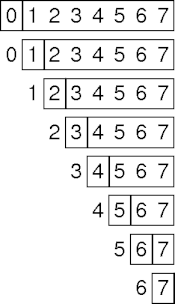

Merging

- Scan from left to right, copying the minimum value.

|

|

merge(T *

tl, tr, al, am, ar)

T * s = am

while tl < tr

if (al < am) ∧

(s == ar or *al < *s)

*tl++ = *al++

else

*tl++ = *s++

|

Mergesort

mergesort(T * aleft, aright, tleft, tright)

int len = aright - aleft

if len > 1

int h = len/2

T * amid = aleft + h

T * tmid = tleft + h

mergesort(aleft, amid, tleft, tmid)

mergesort(amid, aright, tmid, tright)

merge(

tleft, tright, aleft, amid, aright)

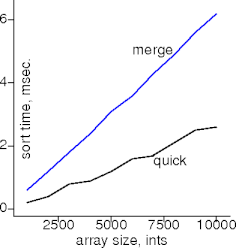

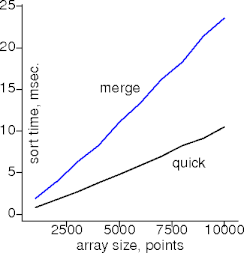

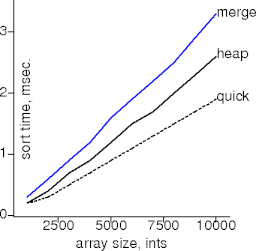

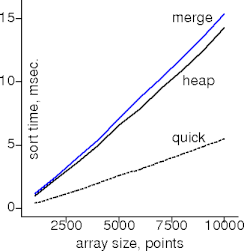

Performance

- The extra copying extracts a run-time cost.

Caveat Emptor

- What does O(n) extra space buy?

Sorting Again

- Remember this?

- A heap can answer these questions at a cost of O(log n) per question.

- That’s worst-case O(n log n) total.

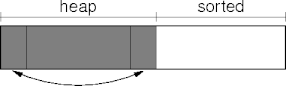

Sorting With A Heap

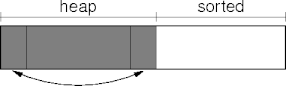

- Given an array of elements, heapify it.

- Remove the max heap element and store it in the open space.

Heapsort

heapsort(a[], n)

for i = 1 to n - 1

bubble-up(a, i)

for i = n - 1 to 1

swap(a[0], a[i])

bubble-down(i)

- The constants are worrisome.

Are They?

Some Problems

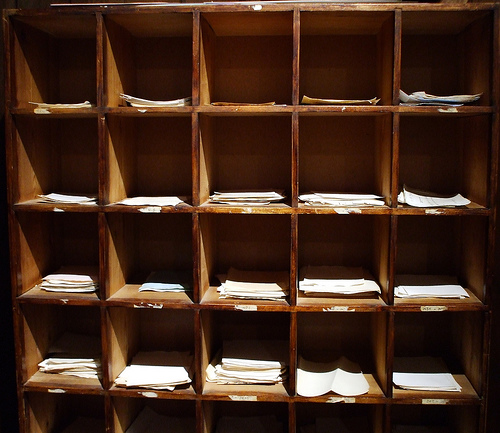

- AT&T handles about a billion phone calls per day.

- They all need to be recorded.

- How do you do it?

- The U.S. Post Office handles about

466 million pieces of mail a day.

- They all need to be routed.

- How do you do it?

The Big Idea

- In both cases, the keys are fixed-length numbers.

- A key can be re-interpreted as a base-M number.

- M is the radix.

- Two common values are M = 2i, i > 0, and M =

10.

- The key is decomposed into radix-M digits.

A Radix-10 Example

- To sort a set of three-decimal-digit numbers:

- Sort the 100s: 000 to 099, 100 to 199, ...

- Sort each 100s group by 10s: 00 to 09, 10 to 19, ...

- Sort each 10s group by 1s: 0 to 9.

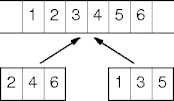

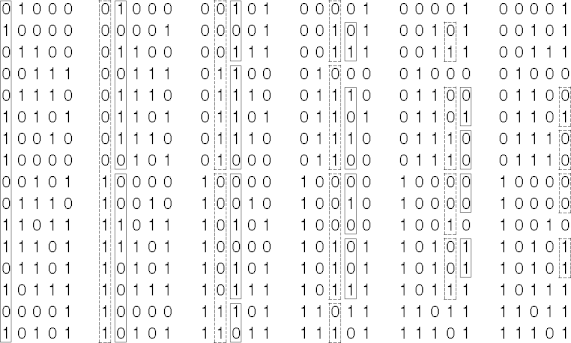

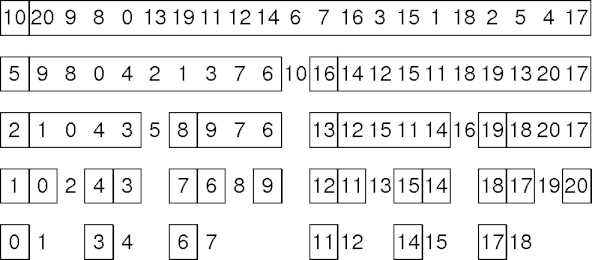

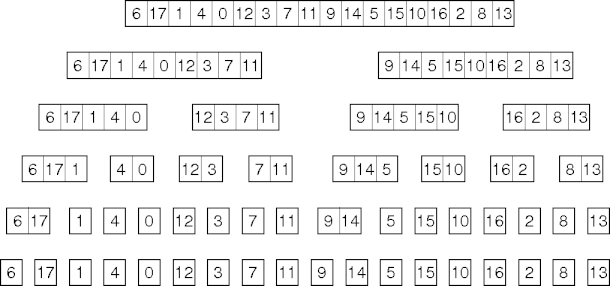

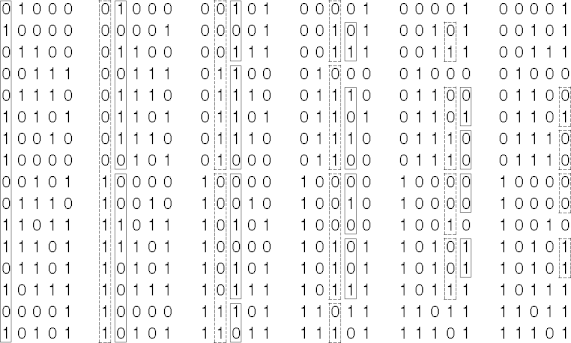

Radix Exchange Sort

- The previous example with radix-2 digits (binary) is called

radix exchange sorting.

- With only two digits, radix exchange sort is similar to quicksort.

- The partition segregates the ith 0 and 1 bits in the key.

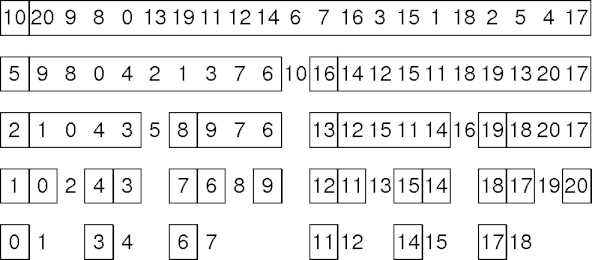

Example Radix Exchange Sort

Radix Exchange Sort

re-sort(int array [], int left, int right, int bit)

if left + 1 < right and bit < 32

int mid = partition(array, left, right, bit)

re-sort(array, left, mid, bit + 1)

re-sort(array, mid, right, bit + 1)

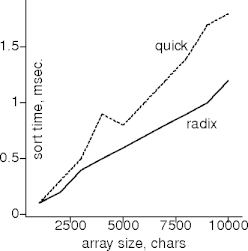

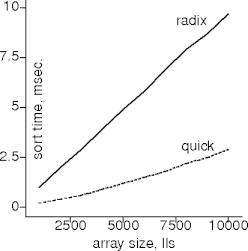

Performance

- Given n b-bit keys, radix exchange sort does

- O(n) work for each partition, and

- O(b) partitions.

- Radix exchange sort does O(nb) worst-case work.

- This is more than O(n) but sometimes less than O(n log n).

- b is independent of n, but is not constant.

Oh Really?

- When b = 8, b < log2 n when n =

256,

but

when b = 64, b < log2 n when n >

1.8×1019.

The Last Step

- We still don’t have a linear sort.

- Let’s go back to the mail room. How do you sort mail?

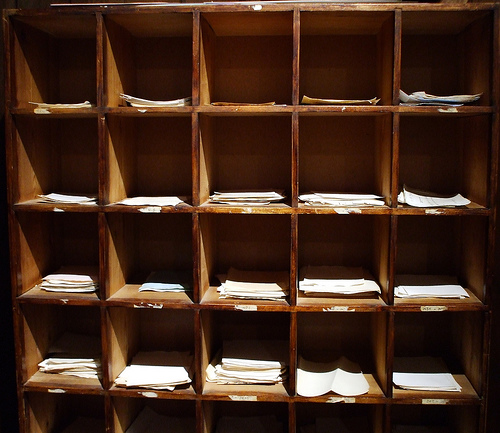

Sorting Mail

|

|

- Each recipient has a mailbox.

- Each piece of mail is placed in the recipient’s mailbox.

|

- This is a linear-time sort.

Requirements

- A linear-time sort has two requirements:

- Enough space to store the sorted values.

- A constant-time addressing scheme to locate values in the space.

- A sort meeting these requirements is known as a bucket (or

distribution) sort.

Data Structures

- An array meets the linear-sort requirements.

- Its size is equal to the number of elements being sorted.

- Indexing is a constant function of the values being sorted.

- Other data structures can also support linear-time searches.

Bucket Sort

- Assume 3-digit decimal numbers.

bucket_sort(int a[], int n)

int counts[1000]

for i = 0 to n - 1

counts[a[i]]++

int j = 0

for i = 0 to 999

while counts[i]-- > 0

a[j++] = i

Summary

- It’s faster when it’s asymptotically faster.

- Quicksort has good average-case behavior, and is tough to beat on those

terms.

- Quicksort also responds to sub-asymptotic improvement tricks.

- An asymptotically better O(n log n) sort requires reliable repeated

division.

- Sub-O(n log n) sorts exist, but require compromises.

- Restrictions on key structure, or key-space size, for example.

References

- Sorting and Searching, Chapter 8, in Java Software Structures,

third ed, by Lewis Chase from Addison Wesley, 2010.

- Sorting Algorithms, Chapter 8, in Data Structures & Problem

Solving Using Java, fourth ed, by Mark Weiss from Addison Wesley, 2010.

-

Sorting out Sorting (≈30 min) by Ronald Baecker,

University of Toronto, 1981 (faster, ≈3 min, but not quiter).

- Algorithm 64: Quicksort by C.A.R Hoare, Communications of the

ACM, July 1961.

- Algorithm 232: Heapsort by J.W.J Williams, Communications of the

ACM, June 1964.

- Engineering a sort function by Jon Bentley and Douglas McIlroy in

Software Practice and Experience, November, 1993.

Credits

|

This page last modified on 2011 April 25.

|

|