- What is concurrency?

- Sequential Computations.

- Communication.

- Synchronization.

- Operating System Concurrency.

- Concurrency characteristics.

- Explicit and implicit concurrency.

- Synchronous and asynchronous concurrency.

- Concurrency classes.

- Concurrency (via Merriam-Webster):

- The simultaneous occurrence of events.

- Agreement or union in action.

- Life is concurrent.

- Concurrency pops up all over the place.

- Computational structures.

- while !eof() { read; process; write }

- Computational properties.

- Why bother?

- Concurrency can be "more natural" than non-concurrency.

- Concurrency can be faster than non-concurrency.

- Doing n things with one or n people.

- A sequential computation is

- an instruction list,

- a data set, and

- a program counter.

- The instruction list is the computation's program.

- The data set is the computation's state.

- The data set consists of global, local, and heap variables.

- The program counter and a few other odds and ends are occasionally included in a computation's state.

- The program counter is the computation's thread of execution.

- This is all we care about in a sequential computation.

- The instructions and the state are the important parts.
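The three parts of a sequential computation can be made concrete with a toy model. This is only a sketch: the class name `SequentialComputation` and the three instruction functions are hypothetical, chosen for illustration, and instructions are modeled as plain Python functions that modify the state.

```python
from dataclasses import dataclass, field


@dataclass
class SequentialComputation:
    """A sequential computation: instruction list, data set, program counter."""
    program: list                               # the instruction list
    state: dict = field(default_factory=dict)   # the data set
    pc: int = 0                                 # the program counter

    def step(self):
        """Execute the instruction at the program counter, then advance it."""
        instruction = self.program[self.pc]
        instruction(self.state)
        self.pc += 1

    def run(self):
        """Step until the program counter runs off the end of the program."""
        while self.pc < len(self.program):
            self.step()
        return self.state


# Hypothetical instructions: each one reads and writes the shared state.
def set_x(state):
    state["x"] = 2


def double_x(state):
    state["x"] *= 2


def add_one(state):
    state["x"] += 1


computation = SequentialComputation(program=[set_x, double_x, add_one])
final_state = computation.run()
print(final_state)  # {'x': 5}
```

The single `pc` field is what makes this computation sequential: there is exactly one position in the instruction list at any moment.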

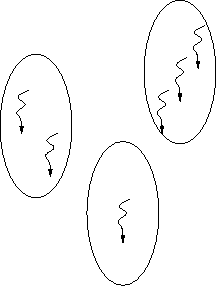

- A concurrent computation is a sequential computation with more than one thread of execution.

- A multi-threaded computation.

- Each thread executes at its own rate.

- Each thread shares the same state.

- A concurrent computation can also consist of more than one sequential computation.

- Each may be multi-threaded or not.

- That's it? A bunch of threads?
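A multi-threaded computation can be sketched with Python's standard `threading` module. The names `shared_state` and `worker` are hypothetical. Each thread has its own position in the loop (its own program counter), but all of them write to the same data set.

```python
import threading

shared_state = {"log": []}   # one data set, visible to every thread


def worker(name, steps):
    # Each thread advances through this loop at its own rate,
    # but every thread appends to the same shared list.
    for i in range(steps):
        shared_state["log"].append((name, i))   # list.append is thread-safe in CPython


threads = [threading.Thread(target=worker, args=(f"t{n}", 3)) for n in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# 4 threads x 3 steps; the interleaving of entries may differ from run to run.
print(len(shared_state["log"]))  # 12
```

The number of entries is fixed, but their order across threads is not, which is the first hint of why communication and synchronization matter.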

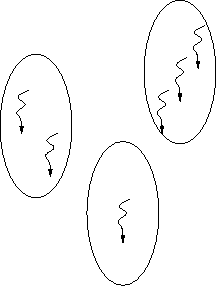

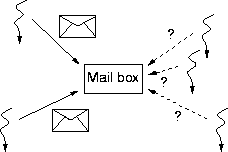

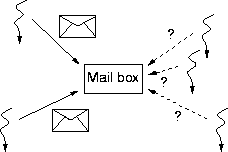

- The constituent computations must communicate.

- Not all the time, and not necessarily regularly.

- But at least once, and maybe repeatedly.

- The amount of communication is important.

- Communication is slower than computation.

- The kind of communication is important.

- Intra-computation communication is much faster (about 100x) than inter-computation communication.

- Threads and communication. Anything else?

- What can go wrong with communication?

- No senders, too many receivers, no receivers.

- Successful communication requires synchronization.

- And there's the rub.

- Concurrency needs communication.

- Communication needs synchronization.

- Synchronization kills concurrency.
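The tension between communication and synchronization shows up in even a small example. Below, the read, process, write loop from earlier is split into three threads that communicate through queues. This is a sketch under assumptions: the stage functions, the `DONE` sentinel, and "processing" as upper-casing are all hypothetical. `queue.Queue` is from the Python standard library; its `get` and `put` calls block, so the synchronization is built into the communication.

```python
import queue
import threading

DONE = object()   # hypothetical sentinel marking the end of the input


def reader(lines, out_q):
    for line in lines:
        out_q.put(line)          # blocks while the queue is full
    out_q.put(DONE)


def processor(in_q, out_q):
    while True:
        item = in_q.get()        # blocks until the reader sends something
        if item is DONE:
            out_q.put(DONE)      # pass the end marker downstream
            break
        out_q.put(item.upper())  # stand-in for real processing


def writer(in_q, results):
    while True:
        item = in_q.get()        # blocks until the processor sends something
        if item is DONE:
            break
        results.append(item)


def run_pipeline(lines):
    raw = queue.Queue(maxsize=2)      # reader -> processor
    cooked = queue.Queue(maxsize=2)   # processor -> writer
    results = []
    stages = [
        threading.Thread(target=reader, args=(lines, raw)),
        threading.Thread(target=processor, args=(raw, cooked)),
        threading.Thread(target=writer, args=(cooked, results)),
    ]
    for stage in stages:
        stage.start()
    for stage in stages:
        stage.join()
    return results


print(run_pipeline(["a", "b", "c"]))  # ['A', 'B', 'C']
```

Every blocking `get` or `put` is a moment when a thread waits instead of computing. The small queue bound (`maxsize=2`) makes that cost visible: a fast reader is held back to the pace of the stages after it.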

- OSs have long been involved with concurrency.

- Ad hoc methods initially.

- Eventually more formal approaches.

- OSs must tame concurrency for themselves.

- OSs must make concurrency available for users.

- Sequential parts:

- Main CPU, device controllers.

- Communication parts:

- Registers, memory-mapped I/O.

- Synchronization parts:

- Sequential parts:

- Communication parts:

- Shared memory, messages, files.

- Synchronization parts:

- Signals, semaphores, blocking I/O.
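As one illustration of the operating system supplying both the communication part and the synchronization part, the sketch below sends a message through an OS pipe and relies on blocking I/O to make the receiver wait. The `sender` function and the message text are hypothetical. `os.pipe`, `os.read`, `os.write`, and `os.close` are standard Python wrappers around the corresponding system calls.

```python
import os
import threading

read_fd, write_fd = os.pipe()   # the OS provides the communication channel


def sender():
    os.write(write_fd, b"hello from the sender")
    os.close(write_fd)          # closing the write end signals end-of-file


t = threading.Thread(target=sender)
t.start()

chunks = []
while True:
    chunk = os.read(read_fd, 1024)   # blocks until data arrives or EOF
    if not chunk:                    # empty bytes: the writer closed its end
        break
    chunks.append(chunk)
os.close(read_fd)
t.join()

message = b"".join(chunks).decode()
print(message)  # hello from the sender
```

The receiver never checks whether the sender has run yet. The blocking read does that waiting for it, which is the synchronization.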

- How do you indicate concurrent execution?

- The programmer tells the compiler.

- Explicit (or imperative) concurrency.

- dopar { read // process // write }

- The compiler tells the programmer.

- Implicit (or declarative) concurrency.

- (1 + 1) * (2 + 2)

- Implicit is better than explicit.

- There's too much bookkeeping for humans.

- Explicit is more practical than implicit.

- Concurrency is too hard for compilers.

- These facts should make you nervous.
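To see the bookkeeping that explicit concurrency puts on the programmer, here is the expression (1 + 1) * (2 + 2) written out explicitly. In the implicit style, a compiler would be left to notice that the two additions are independent. In this sketch the programmer says so by submitting each one as a separate task. The `add` helper is hypothetical. `ThreadPoolExecutor` is from Python's standard `concurrent.futures` module.

```python
from concurrent.futures import ThreadPoolExecutor


def add(a, b):
    return a + b


with ThreadPoolExecutor(max_workers=2) as pool:
    left = pool.submit(add, 1, 1)     # may run concurrently with the next task
    right = pool.submit(add, 2, 2)
    # result() blocks until each task has finished, then the results are combined.
    product = left.result() * right.result()

print(product)  # 8
```

For work this small the overhead of starting tasks far outweighs any gain. The point is only how much extra code one line of arithmetic needs once the concurrency is spelled out.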

- A marching band, a marathon. What's the difference?

- A marching band is synchronous.

- Everybody steps at the same time.

- Everybody starts and stops at the same time.

- A marathon is asynchronous.

- Nobody steps at the same time.

- Nobody starts and stops at the same time.

- Synchronous concurrency runs in lock-step.

- The same set of instructions.

- Synchronous concurrency needs hardware.

- Asynchronous concurrency free-wheels.

- The execution ratio of any two constituent computations is arbitrary.

- This is as close to time as we're going to get.

- Synchronous concurrency is easy to program but possibly slow.

- Each knows where everybody else is.

- Each can only go as fast as the slowest person.

- Asynchronous concurrency is hard to program but possibly fast.

- Hardly anybody knows where anybody else is.

- Each goes at their best pace.

- This course is about asynchronous concurrency.
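The marching band can be approximated in software with a barrier, which forces asynchronous threads into lock-step. This is a sketch, not true hardware-level synchrony: the `marcher` function and the `positions` and `max_gap_seen` variables are hypothetical, and `threading.Barrier` is the standard-library primitive that makes every thread wait until all have arrived.

```python
import threading

N_MARCHERS, N_STEPS = 3, 4
barrier = threading.Barrier(N_MARCHERS)
positions = [0] * N_MARCHERS   # how many steps each marcher has taken
max_gap_seen = []              # list.append is thread-safe in CPython


def marcher(i):
    for _ in range(N_STEPS):
        positions[i] += 1
        barrier.wait()   # nobody continues until everyone has taken this step
        # Between the two barriers nobody moves, so this reading is stable.
        max_gap_seen.append(max(positions) - min(positions))
        barrier.wait()   # everyone finishes looking before anyone steps again


threads = [threading.Thread(target=marcher, args=(i,)) for i in range(N_MARCHERS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(positions)          # [4, 4, 4]
print(set(max_gap_seen))  # {0}
```

Each marcher always knows where the others are (the gap is always zero), and each step takes as long as the slowest marcher needs. Removing the barriers gives the marathon: every thread runs at its own pace, and the gap between them becomes arbitrary.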

- Communication speed distinguishes concurrency classes.

- Parallel programs have fast (nanosecond) communication.

- Scientific computation.

- Vector, pipelined, and other CPU architectures.

- Concurrent programs have medium (microsecond) communication.

- Multi-threaded and multi-process computations.

- Uni- and multi-processor systems.

- Distributed programs have slow (millisecond and up) communication.

- Client-server and peer-to-peer systems.

- The Internet and other networks.

- Concurrency is everywhere.

- Concurrency is sequential computations plus communication and synchronization.

- Balancing computations, communication, and synchronization is the trick.

- Concurrency can be explicit or implicit.

- Explicit is hard but fast, implicit is easy but slow.

- Concurrency can be synchronous or asynchronous.

- Asynchronous is hard but fast, synchronous is easy but slow.

- Parallel, concurrent, and distributed computations.

- The difference is the communication speed.

This page last modified on 27 May 2003.