Operating Systems Lecture Notes

7 March 2012 • Virtual Store

Outline

- Big programs and overlays.

- Virtual Store and addressing.

- Address translation.

- Page management.

Big Programs, Small Storage

- We still need to run programs bigger than primary store.

- Overlays use programmer-defined fragments.

- Virtual store and paging use system-defined fragments.

- Both the hardware and os are invoved.

- But not programmers, unless they want to.

Big Programs

- Big programs excede physical store.

- They use addresses from N up.

- Cut big program into pieces.

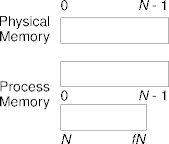

Big Addresses

|

|

|

- Rather than adding a base, subtract it.

- Or just compile the second half with base 0.

Relocation

- A program is twice as long as physical store.

- Cut the program in half.

- Relocate the top-half addresses by -N.

- Now both halves of the program have addresses in the range 0 to N - 1.

- This works only when each half is independent of the other.

- Addresses are recycled, and so are ambiguous.

Management

- Transitions between halves is done by system call.

- The OS

- Writes the old half to disk to save modified data.

- Optional if no data was written

- Reads in the new half, resets the process state, and returns.

- Writes the old half to disk to save modified data.

- This is expensive.

Overlays

- Overlays generalize the two-part program.

- An overlay is a program fragment relocated to a

physical-storage segment known as an overlay region.

- Several overlays may map into the same physical-storage segment.

- A program may comprise several overlay regions.

Overlay Definitions

- The programmer defines the overlays and overlay regions.

- Usually via program structure and linker incantations.

- The compiler creates them.

- The OS implements them.

- There’s no protection against mis-alignment or mis-referencing.

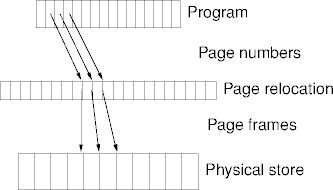

Virtual Store

- Make overlays small and regular.

- Programs are automatically fragmented into pages.

- Some address translation trickery provides virtual storage.

- Virtual addresses - completely decouple physical and process addresses.

- Virtual memory fragments - pages.

- Virtual addresses - page number, page offset.

Paging

- Pages are uniform overlays.

- Memory management unit (MMU) - virtual to real address mapping.

- Translation look-aside buffer (TLB) - speeding up the MMU.

- Inverted page tables.

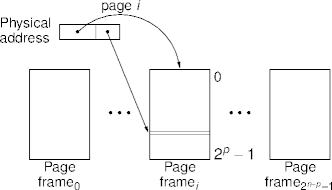

Pages

- The process address space is divided into pages.

- Physical memory is divided into page frames.

- Page frames and pages are (usually) the same size.

- Usually around 1 to 4 kbytes (210 to 212 bytes).

- A small multiple of the disk-block size.

Virtual Store

- Virtual store is like physical store, except that virtual store

doesn’t exist.

- A conceptual fixed-length array of units.

- VS units are the same size as PS units.

- N, the virtual-store size, is usually vastly larger than

physical-store size.

- It can also be smaller, or the same size.

- N is usually 232 (4.2 gig) or 264.

Example

Virtual Addresses

- A virtual address is an index in the conceptual array.

- Pages give virtual addresses a structure not found in physical addresses.

- A virtual address identifies both

- the page containing the unit, and

- the offset of the unit within the page.

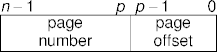

VA Structure

- Let page size be 2p units (10 ≤ p ≤ 12 say).

- Let a virtual address be n bits (n = 32 or 64, say).

- The page offset is the leftmost p bits of a virtual address.

- The page number (or index) is the rightmost (n - p) bits of the virtual address.

Management

- Virtual addresses have to be mapped to physical addresses.

- Page tables map from virtual address to page frames.

- Issues

- Page table size - the trade-off.

- Translation speed - as fast as possible.

- Context switch overhead - as low as possible.

Page Tables

- Single level page tables.

- Registers - small and expensive but fast.

- Main store - big and cheap but slow.

- Multi-level page tables - 219 8k pages in 32-bit address space.

- Page table entries - page frame number, present-absent bit, protection bits, modified-referenced bits, cache bit, pinned bit.

Example

Translation Look-Aside Buffer

- The MMU has to be fast and small to keep costs down.

- Speeding up the MMU.

- Caching page table to page frame mappings

- Hardware or software.

- Prefetching - process stack + code.

TLB Example

- For example, consider 50 ms TLB lookup, 750 ms store access.

- TLB hit: 50 tlb + 750 store access

- TLB miss: 50 tlb + 750 page table access + 750 store access

- A 8-16 entry TLB has a 80-90% hit ratio.

Inverted Page Tables

- 64-bit addresses with 16k page frames, 248 = 281*1012 pages.

- Page frame → (proc, page no) mappings

- 232 mbyte main store → 216 216 kbyte page frames.

- Use a TLB to speed-up mapping.

- Use hashing on addresses when the TLB misses.

Page Replacement

- What to do when good addresses go bad?

- Page faults.

- Random page selection - yank any in-store page; not very efficient.

- Optimal page replacement - furthest use in the future.

Used-Based Replacement

- Toss pages not recently used.

- Modified & referenced bits.

- Periodically clear reference bits to find old, unreferenced pages

- Toss not referenced before referenced pages, and not modified before modified pages.

- Hardware implementation - still need to clear reference bits.

- Software - interrupts and protection.

Related Strategies

- Fifo - oldest out next; no reference or modify checking.

- Second chance - oldest unreferenced out.

- Clock page replacement - second chance or fifo as a ring.

LRU Replacement

- The past as prolog.

- Page queue ordered by access time.

- Counters-based timers.

- Matrix-based timers

LRU Analysis

- LRU simulations.

- Not frequently used adds a read bit to a counter; doesn’t forget.

- Aging shifts the read bit in from the right.

- LRU problems.

- There is no intra-interval granularity.

- Resolution is finite and small.

Design Issues

- working set model

- local vs. global page allocations

- page size

- the store-management interface

Working Set Model

- Demand paging.

- Locality of reference.

- Working set.

- Thrashing

- Prefetch

- Using counters to compute the working set.

- Adding the working set to paging algorithms - wsclock.

Local vs. Global Page Allocations

- Fractional vs. weighted total allocations.

- Managing by page-fault frequency.

- Swapping is still useful to handle global thrashing.

Page size

- Set by hardware or software - alpha 8, 16, 32, 64kb pages.

- Internal fragment, small segments argues for small pages.

- Small page tables, paging overhead argues for large pages.

- Page table overhead on context switches.

Summary

- Overlays fold large programs into small physical store.

- Pages are small, regular, system maintained overlays.

- Page address structure leads to virtual store.

- Hardware assist makes for dynamic relocation.

- Page management trades-off allocation for residency.

| This page last modified on 2012 March 7. |