Operating Systems Lecture Notes

2014 October 23 • Segmented and Paged Storage

Outline

- Segmentation

- Paging

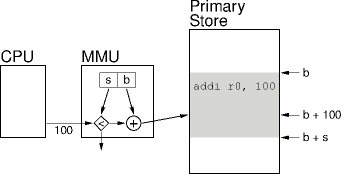

Relocation Illustrated

Areas of Interest

- A process’s address space regions have different access

behaviors.

- Code is read and executed, but not written.

- Data are read and written, but not executed.

- Global data are static read-write.

- Constant data are static read-only.

- Heap and stack data are dynamic read-write.

- Distinguishing these regions opens up opportunities.

Process Segments

- Segments are address-space regions of uniform access behavior.

- Segments can be packed into a contiguous address space.

- Or the address space can be a set of segments.

|

|

|

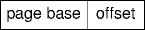

Segment Addresses

- Contiguous segments use relocatable (base + offset) addresses.

- Noncontiguous segments also use relocatable addresses, but each

segment has a different base and limit.

|

|

|

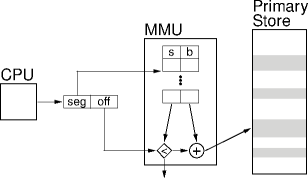

Segment Addressing

- The MMU becomes a set of segmentation registers, one per

segment.

Segment Allocation

- Each address-space segment is allocated as usual.

- Simpler because smaller, but more of them.

- Noncontiguous segments can use noncontiguous allocation.

- Dynamic (on-demand) segment loading doesn’t load unused segments.

- MMU segmentation register look-up fails.

Access Protection

- Segments are tagged with the allowed access types.

- Reading, writing or executing.

- Access-type bits added to the segment registers.

- CPU issues logical addresses and access types.

- Mismatches in issued and allowed access types cause a protection violation.

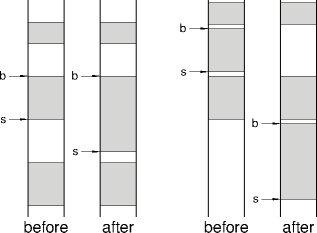

Dynamic Segments

- Increasing a segment’s size is straightforward.

- Move the segment to a larger free-space chunk.

- Adjust the segment’s base and limit registers.

- If the segment’s adjacent to a large-enough free-space chunk, skip step 1.

- Contiguous address spaces can be adjusted in the same way.

- With more copying.

Size-Change Examples

Segment Problems

- The segment-count/segment-size trade-off can be tricky.

- Many small segments or few huge segments.

- More allocations per process.

- More smaller allocations promote external fragmentation.

- Handling segmented addresses is tricky and inconvenient.

- Swapping gets messy and more inefficient.

Improving Segments

- Noncontiguous is good: non-picky allocation.

- Variable size is bad: too much housekeeping and overhead.

- Split the difference: use fixed-sized segments called pages.

- Pages are small, from 512 bytes to low Mbytes.

- Typically low kbytes — 1, 2, 4 kbytes.

- A process’s address space is an integral number of fixed-sized pages.

Paged Primary Store

- Primary store is divided into page-sized slots called page frames.

- Page allocation is trivial: use any open page frame.

- Allocate off the head of the page-frame free list.

- External fragmentation disappears.

- Or rather, is replaced by internal fragmentation.

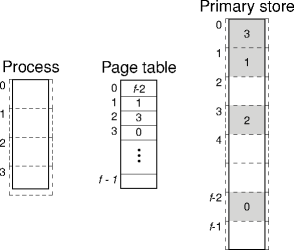

Paged Addressing

- Paged addressing is just like segmented addressing.

- Just change “segment” to “page”.

- Just change “segment” to “page”.

- The segment registers become the page table, a mapping from page numbers to page frames.

Page Addressing Example

MMU in Paging

- The MMU still translates logical to physical addresses.

- Using the page table instead of segment registers.

- Pages and page-table entries can be tagged with access bits.

- May induce more internal fragmentation.

- Per-segment length checks are gone.

Page Count Problems

- Large (Gbyte) primary stores and small (kbyte) pages lead to large page tables.

- 2 Gbyte/2 kbyte = 220 ≈ 106 page-table entries.

- Megabyte-capable MMUs are way too expensive to contemplate.

- Even modestly sized page tables are too expensive to swallow whole.

- How should large page-table sizes be handled?

Large Page Tables

- If the page table can't fit in the CPU, it has to go in primary store.

- The CPU page-table register points to the page table.

- Each access takes two accesses.

- One for the page-table access, the second for the access.

- Doubling storage access time is unacceptable. How to fix?

Caching

- Remember (page number, page frame) pairs on-chip.

- When mapping, look on-chip first, then go to the page table if needed.

- (Number, frame) pairs are stored in a fast, small, associative on-chip

cache.

- The translation look-aside buffer (TLB).

- Fast: single-digit cycles; small: 4 to 256 entries.

- Associative: parallel search.

Page Protection

- Pages can be tagged with access (rwx) bits.

- Page-table entries contain allowed accesses.

- Page-table entries may also have a validity bit.

- No frame for this page.

- Illegal access past the ends.

- Holes in the process address space.

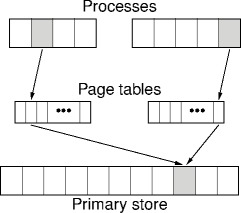

Sharing Pages

- Two or more processes can map the same page frame into their address

spaces.

- The resulting pages are shared among the processes.

- Processes can use shared pages for IPC.

- OSs can use page sharing to share common code among processes.

- Page-table hacks are fun and fast.

- The results are usually fun and fast too.

Sharing Example

Big Store

- Modern address spaces can be huge, 248 (≈ 1014) to 264 (≈ 1019) bytes.

- The resulting page tables are ridiculously large, even for process

address spaces.

- 248/212 = 236 ≈ 64 billion entries.

- Not too large for primary store, but otherwise useless.

- What happens when address spaces get huge?

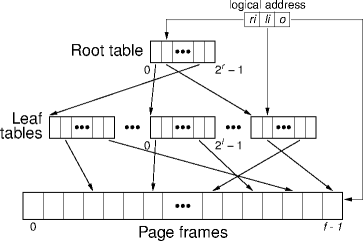

Paging Hierarchy

- When the page table gets too large, cut it down to size.

- Turn a 48-bit address space into a set of 4G (232) chunks.

- There are 248/232 = 216 ≈ 64k chunks.

- The result is a hierarchical page table.

- The root page table indexes leaf page tables.

- Each leaf page table handles a 4G chunk.

- Repeat as needed (or until comfortable).

Hierarchy Example

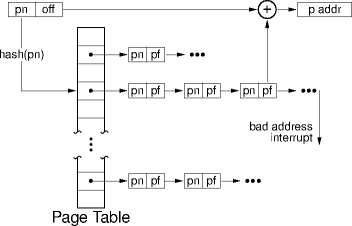

Hashed Page Tables

- Replace the page table with a hash table.

- Hashed page-number keys.

- The bucket values are page frames.

- Collisions resolved by matching page numbers.

- Clustering groups page numbers into a single bucket.

- Good when the process address space is sparse.

Hash Table Example

Fun Facts

- Physical store is smaller than logical store.

- Physical store was rarely 232 and is never 248 or 264 bytes.

- Far fewer page frames than pages.

- There is one page frame i, but many page is.

- As many page is as processes.

- And as many page tables.

- So what?

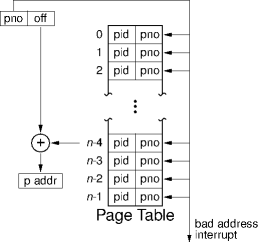

Inverted Page Tables

- Use page-frame numbers as page-table indices, not page numbers.

- An inverted page table.

- An entry is a (proc. id, page no) pair, or is empty.

- There is one inverted page table, not one per process.

- No context-switch overhead.

- An inverted page table is smaller than a page table.

Inverted Table Example

Summary

- Segments recognize regions of common process access.

- Segment allocations provides flexibility and convenience.

- Pages are fixed-sized segments, independent of use.

- Pages generalize segment flexibility.

| This page last modified on 2014 October 25. |